Running a Local LLM

LLMs are becoming very popular. The possibilities are endless with these LLMs. One danger is that all your private data is used by the companies hosting these LLMs. Therefore, it would be good to know how you can run a local LLM.

One popular local framework for running LLMs is Ollama. With Ollama, you can select an Open Source LLM, download it and then run it on your local machine. And the best thing is that only a little tweak is needed from moving from a LLM cloud provider to Ollama as their API mimics one of the most widely used APIs.

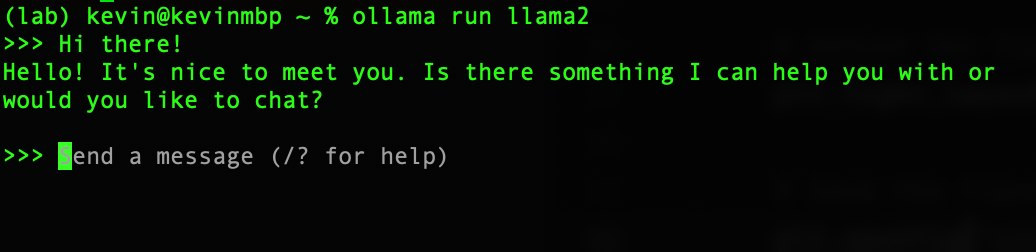

Let's start by downloading and running the popular Llama2 model. This can be done by executing the following command in your terminal (after installing Ollama):

ollama run llama2

Ollama will also become available as a webservice at http://localhost:11434. But suppose you would like to run an application developed on top of Python with the openai package on Ollama, how can we do that?

LiteLLM proxy

We can use LiteLLM to serve as a proxy for the openai package. First, we would need to install LiteLLM with pip install litellm. Then we can use the litellm package just like we would use the openai package:

from litellm import completion

response = completion(

model="ollama/llama2",

messages=[{"content": "Hi there!","role": "user"}],

api_base="http://localhost:11434"

)

print(response)It is just like how you would use the OpenAI package, but now for free and on your local machine.

Conclusion

Large LLM cloud providers get more and more information as more people use their services. With Local LLMs, we can stay in charge of our own data and achieve the same results at a much lower prise.